In this Episode of The Human Upgrade™...

BOOKS

4X NEW YORK TIMES BEST-SELLING SCIENCE AUTHOR

AVAILABLE NOW

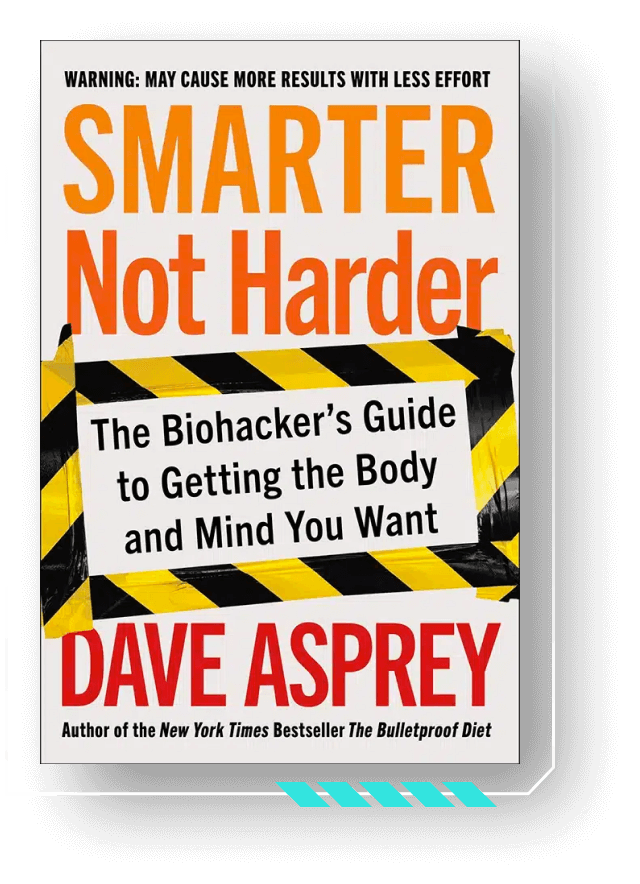

Smarter Not Harder

Smarter Not Harder: The Biohacker’s Guide to Getting the Body and Mind You Want is about becoming the best version of yourself by embracing laziness while increasing your energy and optimizing your biology.

If you want to lose weight, increase your energy, or sharpen your mind, there are shelves of books offering myriad styles of advice. If you want to build up your strength and cardio fitness, there are plenty of gyms and trainers ready to offer their guidance. What all of these resources have in common is that they offer you a bad deal: a lot of effort for a little payoff. Dave Asprey has found a better way.